So you want to do reflection?

Scala 2.10 will come with a Scala reflection library. I don't know how it fares when it comes to reflecting Java -- I'll look into it when I have time. For now, my hands are overflowing with all there is to learn about scala.reflect.

So, first warning: this is not an easy library to learn. You may gather some recipes to do common stuff, but understanding what's going on is going to take some effort. Hopefully, by the time you read this, Scaladoc will have been improved enough to make the task easier. Right now, however, you'll often come upon types which are neither hyperlinked nor have path information on mouse-over, so it is important to learn where you are likely to find them.

It will also help to explore this thing from the REPL. Many times as I worked my way towards enlightenment (not quite there yet!), I found myself calling methods on the REPL just to see what types they returned. At other times, however, I stumbled upon exceptions where I expected none! As it happens, class loaders play a role when reflecting Scala -- and the REPL has two different mirrors.

There's also a difference between classes loaded from class files versus classes that have been compiled in memory. Scala stores information about the type from Scala's point of view in the class file. In its absence (REPL-created classes), not all of it can be recomposed.

Bottom line: having the code type check correctly is not a guarantee of success when it comes to reflection. Even if the code compiles, it will throw an exception at run time if you did not make sure you have the proper mirror.

In this blog post, I'll try to teach reflection basics, while building a small JSON serializer using Scala reflection. I'll also go, in future posts, through a deserializer and a serializer that takes advantage of macros to avoid slow reflection calls. I'm not sure I'll succeed, but, if I fail, maybe someone will learn from my errors and succeed where I failed.

As a final note: don't trust me! I'm learning this stuff as I go, and, besides, it isn't set in stone yet. Some of what you'll see below is already scheduled to be changed, and other things might change as a result of feedback from the community. But every piece of code will be runnable on the version I'm using -- which is something between Scala 2.10.0-M4 and whatever comes next. I'm told, even as I write this, that Scala 2.10.0-M5 is around the corner, and that it will provide functionality I'm missing and fix bugs I've stumbled upon.

Warning: I'm writing this with 2.10.0 ready but not yet announced, and I've just become aware that, at the moment, reflection is not thread-safe. See issue 6240 for up-to-date information.

The Reflection Libraries

When Martin Odersky decided to write Scala's reflection library, he stumbled upon a problem: to do reflection right -- that is, to accomplish everything reflection is supposed to accomplish -- he'd need a significant part of the compiler code duplicated in the standard library.

The natural solution, of course, would be moving that code to the standard library. That was not an enticing prospect. It happened before, with some code related to the XML support, and the result speaks for itself: the standard library gets polluted with code that makes little sense outside the compiler, and the compiler loses flexibility to change without breaking the standard library API. As time passes, code would start to be duplicated so it could be changed and diverge, and the code in the standard library would become useless and rot.

So, what to do? Odersky found the solution in a famous Scala pattern: the cake pattern. Scala reflection is a giant multi-layered cake. We call these layers universes, and in them we find types, classes, traits, objects, definitions and extractors for various things. A simple look at what the base universe extends gives us a notion of what's in it: Symbols with Types with FlagSets with Scopes with Names with Trees with Constants with AnnotationInfos with Positions with TypeTags with TagInterop with StandardDefinitions with StandardNames with BuildUtils with Mirrors.

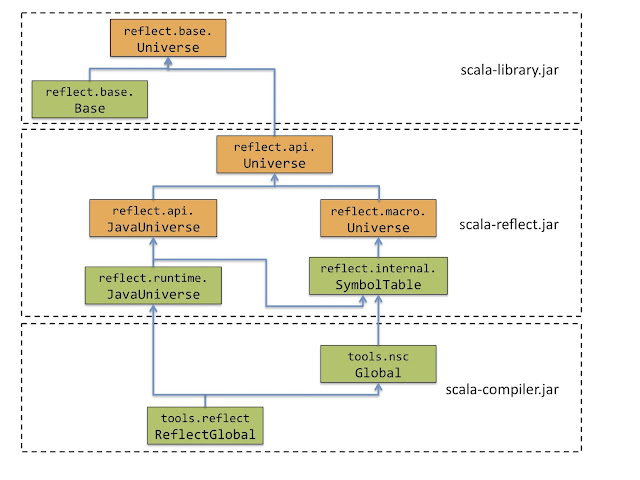

The base layer provides a simple API, with type members defined by upper bounds where minimal functionality is provided. That base layer is available in the standard library, shared by all users of the reflection API: scala.reflect.base.Universe. This provides replacements for the now deprecated Manifest and ClassManifest used elsewhere in the standard library, and also serves as a minimally functional implementation of reflection, available even when scala-reflect.jar is not referenced.

The standard library also has a bare bones implementation of that layer, through the class scala.reflect.base.Base, an instance of which is provided as scala.reflect.basis, in the scala.reflect package object.

Take a moment and go over that again, because it's a common pattern in the reflection library. The instance basis is a lazy val of type Universe, whose value is an instance of class Base.
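To make the shape of that pattern concrete, here is a toy sketch. None of these names come from the reflection library: MiniUniverse, MiniBase and miniBasis are made up for illustration, and the real Universe is of course far larger. The sketch only shows an API trait with an abstract type member bounded from above, a bare-bones class implementing it, and a lazy val typed as the API.

```scala
// Toy illustration only: MiniUniverse, MiniBase and miniBasis are hypothetical names.
object CakeSketch {
  // The API layer: an abstract type member with an upper bound,
  // promising only minimal functionality.
  trait MiniUniverse {
    type Name <: NameApi
    trait NameApi { def text: String }
    def newName(s: String): Name
  }

  // A bare-bones implementation of that layer.
  class MiniBase extends MiniUniverse {
    class Name(val text: String) extends NameApi
    def newName(s: String): Name = new Name(s)
  }

  // Exposed with the API type; the value is an instance of the implementation.
  lazy val miniBasis: MiniUniverse = new MiniBase
}
```

Code written against miniBasis only sees what MiniUniverse declares, so the implementing class can change without breaking it.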

Upon this layer we can find other layers that enrich that API, providing additional functionality. Two such layers can be found in a separate jar file, scala-reflect.jar. There's a base API in package scala.reflect.api, which is also called Universe. This API is inherited by the two implementations present in this jar: the Java-based runtime scala.reflect.api.JavaUniverse, and scala.makro.Universe, used by macros. By the way, the name of this last package will change, at which point this link will break. If I do not fix it, please remind me in the comments.

Now, notice that these are APIs, interfaces. The actual implementation for scala.reflect.api.JavaUniverse is scala.reflect.runtime.JavaUniverse, available through the lazy val scala.reflect.runtime.universe (whose type is scala.reflect.api.JavaUniverse).
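A small sketch of what that means in code, assuming scala-reflect.jar is on the classpath (the object name UniverseSketch and the alias ru are mine, not the library's). The universe value is typed as the API trait, and types such as Type are members of a universe, so they are path-dependent on it.

```scala
import scala.reflect.api
import scala.reflect.runtime.{universe => ru}

object UniverseSketch {
  // The value is typed as the API trait, not as the implementing class.
  val u: api.JavaUniverse = ru

  // Type is a member of the universe, so this is ru.Type specifically.
  val stringType: ru.Type = ru.typeOf[String]
}
```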

At any rate, the JavaUniverse exposes functionality that goes through Java reflection to accomplish some things. Not that it is limited by Java reflection, since the compiler can inject some information at compile time, and keeps more precise type information stored on class files. You'll see how far it can go in the example.

There are more layers available in the compiler jar, but that's beyond our scope, and more likely to change with time. Look at the image from the Reflection pre-SIP for an idea of how these layers are structured.

And that's what I meant by reflection libraries in the heading: there are multiple versions of this library, exposing different amounts of API. For example, at one point the Positions API was not available in the standard library, something which was later changed through feedback from the community. On the compiler, scala.tools.nsc.Global extends SymbolTable with CompilationUnits with Plugins with PhaseAssembly with Trees with Printers with DocComments with Positions, which exposes much more functionality (and complexity) than the layers above.

What's it for, anyway?

So, what do we do with a reflection library? If you think of Java reflection, you'll end up with a rather stunted view of what reflection should do, given how limited it is. In truth, Java reflection doesn't even reflect the Java language, which is a much more limited language than Scala.

The Scala Reflection pre-SIP suggests some possibilities, though not all are contemplated by what's available on Scala 2.10.0:

- Runtime reflection where one explores runtime types and their members, and gains reflective access to data and method invocations.

- Reification where types and abstract syntax trees are made available at runtime.

- Macros which use reflection at compile time to access their context and produce trees and types.

It goes on to describe some questions that must be answered by the reflection library:

- What are the members of a certain kind in a type?

- What are the types of these members as seen from an instance type?

- What are the base classes of a type and at which instance types are they inherited?

- Is some type a subtype of another?

- Among overloaded alternatives of a method, which one best matches a list of argument types?
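As a rough sketch, here is how most of those questions look against the runtime universe. This assumes Scala 2.11 or later with scala-reflect.jar on the classpath, where TermName replaces the newTermName spelling used in this post; the object name QuestionsSketch is made up. The last item only lists the overloaded alternatives and does not attempt to pick the best match.

```scala
import scala.reflect.runtime.universe._

object QuestionsSketch {
  val listOfInt: Type = typeOf[List[Int]]

  // Members of a certain kind: the names of all method members.
  val methodNames: Set[String] =
    listOfInt.members.filter(_.isMethod).map(_.name.toString).toSet

  // Type of a member as seen from an instance type: head on List[Int].
  val headType: Type =
    listOfInt.member(TermName("head")).typeSignatureIn(listOfInt)

  // Base classes, and the instance type at which one of them is inherited.
  val bases: List[Symbol] = listOfInt.baseClasses
  val asIterable: Type = listOfInt.baseType(typeOf[Iterable[_]].typeSymbol)

  // Is some type a subtype of another?
  val isSeq: Boolean = listOfInt <:< typeOf[Seq[Int]]

  // Overloaded alternatives of a method (listing only, no resolution).
  val indexOfOverloads: List[Symbol] =
    typeOf[String].member(TermName("indexOf")).asTerm.alternatives
}
```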

That doesn't mean we can open a class and rewrite the code in its methods -- though macros might allow that at some point after 2.10.0. For one thing, bytecode doesn't preserve enough information to reconstruct the source code or even the abstract syntax tree (the compiler representation of the code).

A class file contains a certain amount of information about the type of a class, and an instance of a class just knows what its own class is. So, if all you have is an instance, you can't gain knowledge through reflection about the type parameters it might have. You can, however, use that information at compile time through macros, or preserve it as values for use at run time.
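A minimal sketch of the "preserve it as values" option, assuming scala-reflect.jar is on the classpath (TypeTagSketch and staticTypeOf are names I made up). The context bound asks the compiler to build a TypeTag at the call site, where the static type is still known, and that tag travels along as an ordinary value.

```scala
import scala.reflect.runtime.universe._

object TypeTagSketch {
  // The TypeTag is materialized by the compiler at the call site
  // and passed in implicitly.
  def staticTypeOf[T: TypeTag](v: T): Type = typeOf[T]

  val xs = List(1, 2, 3)

  // The instance alone only knows its erased runtime class.
  val runtimeClass: Class[_] = xs.getClass

  // The tag preserved the full type, type argument included.
  val fullType: Type = staticTypeOf(xs)
}
```

Here runtimeClass says nothing about Int, while fullType is List[Int].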

Likewise, though you cannot recover the AST (abstract syntax tree) of a method, you can get the AST for code at compile time, modify it with macros, or even parse, compile and execute code at run time if you use what's available on scala-compiler.jar.

Speaking of macros, I'd like to point out the suggestion that "reflection" might be used at compile time. Reflection is usually defined as a run time activity. However, the API used by macros (or the compiler) at compile time will be mostly the same as the one used by programs at run time. I predict Scala programmers will unassumingly talk about "reflection at compile time".

"This is all getting a little confusing..."

Ok, so let's see some code to show you what I'm talking about. I'm not going to call methods or create instances, since that requires more information than we have seen so far, and, besides, you could do that stuff with Java reflection already.

Let's start with a simple example. We'll get the type of a List[Int], search for the method head, and then look at its type:

    scala> import scala.reflect.runtime.universe._
    import scala.reflect.runtime.universe._

    scala> typeOf[List[Int]]
    res0: reflect.runtime.universe.Type = List[Int]

    scala> res0.member(newTermName("head"))
    res1: reflect.runtime.universe.Symbol = method head

    scala> res1.typeSignature
    res2: reflect.runtime.universe.Type = => A

The initial import made the runtime universe, the one that makes use of Java reflection, available to us. We then got the type we wanted with typeOf, which is similar to classOf. After that, we got a handle to the method named head, and, from that handle (a Symbol), we got a Type again.

Note that the type being returned by the method is "A". It has not been erased, but, on the other hand, it is not what we expected from List[Int]. What we got was the true type of head, not the type it has on a List[Int]. Fortunately, we can get the latter quite easily:

    scala> res1.typeSignatureIn(res0)
    res3: reflect.runtime.universe.Type = => Int

Which is what we expected. But, now, let's make things a bit more general. Say we are given an instance of something whose static type we know (that is, it isn't typed Any or something like that), and we want to return all non-private methods it has whose return type is Int. Let's see how it goes:

    scala> def intMethods[T : TypeTag](v: T) = {
         |   val IntType = typeOf[Int]
         |   val vType = typeOf[T]
         |   val methods = vType.members.collect {
         |     case m: MethodSymbol if !m.isPrivate => m -> m.typeSignatureIn(vType)
         |   }
         |   methods collect {
         |     case (m, mt @ NullaryMethodType(IntType)) => m -> mt
         |     case (m, mt @ MethodType(_, IntType)) => m -> mt
         |     case (m, mt @ PolyType(_, MethodType(_, IntType))) => m -> mt
         |   }
         | }
    intMethods: [T](v: T)(implicit evidence$1: reflect.runtime.universe.TypeTag[T])Iterable[(reflect.runtime.universe.MethodSymbol, reflect.runtime.universe.Type)]

    scala> intMethods(List(1)) foreach println
    (method lastIndexWhere,(p: Int => Boolean, end: Int)Int)
    (method indexWhere,(p: Int => Boolean, from: Int)Int)
    (method segmentLength,(p: Int => Boolean, from: Int)Int)
    (method lengthCompare,(len: Int)Int)
    (method last,=> Int)
    (method count,(p: Int => Boolean)Int)
    (method apply,(n: Int)Int)
    (method length,=> Int)
    (method hashCode,()Int)
    (method lastIndexOfSlice,[B >: Int](that: scala.collection.GenSeq[B], end: Int)Int)
    (method lastIndexOfSlice,[B >: Int](that: scala.collection.GenSeq[B])Int)
    (method indexOfSlice,[B >: Int](that: scala.collection.GenSeq[B], from: Int)Int)
    (method indexOfSlice,[B >: Int](that: scala.collection.GenSeq[B])Int)
    (method size,=> Int)
    (method lastIndexWhere,(p: Int => Boolean)Int)
    (method lastIndexOf,[B >: Int](elem: B, end: Int)Int)
    (method lastIndexOf,[B >: Int](elem: B)Int)
    (method indexOf,[B >: Int](elem: B, from: Int)Int)
    (method indexOf,[B >: Int](elem: B)Int)
    (method indexWhere,(p: Int => Boolean)Int)
    (method prefixLength,(p: Int => Boolean)Int)
    (method head,=> Int)
    (method max,[B >: Int](implicit cmp: Ordering[B])Int)
    (method min,[B >: Int](implicit cmp: Ordering[B])Int)
    (method ##,()Int)
    (method productArity,=> Int)

    scala> intMethods(("a", 1)) foreach println
    (method hashCode,()Int)
    (value _2,=> Int)
    (method productArity,=> Int)
    (method ##,()Int)

Please note the pattern matching above. Tree, Type and AnnotationInfo are ADTs, and often you'll need pattern matching to deconstruct or to obtain a more precise type. For the record, the pattern matches above refer to methods without parameter lists (like getters), normal methods, and methods with type parameters, respectively.

EDIT: A recent discussion indicates that pattern matching with types may not be reliable. Instead, the code above should have read like this:

    def intMethods[T : TypeTag](v: T) = {
      val IntType = typeOf[Int]
      val vType = typeOf[T]
      val methods = vType.members.collect {
        case m: MethodSymbol if !m.isPrivate => m -> m.typeSignatureIn(vType)
      }
      methods collect {
        case (m, mt @ NullaryMethodType(tpe)) if tpe =:= IntType => m -> mt
        case (m, mt @ MethodType(_, tpe)) if tpe =:= IntType => m -> mt
        case (m, mt @ PolyType(_, MethodType(_, tpe))) if tpe =:= IntType => m -> mt
      }
    }

I decided to put up this edit as quickly as possible, so I'm just posting the revised version of the method, instead of revising the post with usage, etc.
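For readers on later releases, here is a hedged sketch of the same idea without the three-way pattern match. It assumes Scala 2.11 or later with scala-reflect.jar on the classpath, where Type offers finalResultType; that method is not available on the milestone this post was written against. The names ResultTypeSketch, Count, Box and intMethodNames are made up for the example, and Count is there to show why =:= matters for aliases.

```scala
import scala.reflect.runtime.universe._

object ResultTypeSketch {
  type Count = Int // an alias for Int

  class Box {
    def size: Count = 3
    def name: String = "box"
  }

  // Names of the non-private methods of T whose final result type is Int.
  // finalResultType looks through type parameters and parameter lists,
  // and =:= treats an alias and its target as the same type.
  def intMethodNames[T: TypeTag]: Set[String] = {
    val tpe = typeOf[T]
    tpe.members
      .filter(m => m.isMethod && !m.isPrivate)
      .filter(m => m.typeSignatureIn(tpe).finalResultType =:= typeOf[Int])
      .map(_.name.toString)
      .toSet
  }
}
```

Calling ResultTypeSketch.intMethodNames[ResultTypeSketch.Box] should include size, even though it is declared as returning Count, and leave out name.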

To finish up the examples, let's see some manipulation of code:

    scala> import scala.tools.reflect.ToolBox
    import scala.tools.reflect.ToolBox

    scala> import scala.reflect.runtime.{currentMirror => m}
    import scala.reflect.runtime.{currentMirror=>m}

    scala> val tb = m.mkToolBox()
    tb: scala.tools.reflect.ToolBox[reflect.runtime.universe.type] = scala.tools.reflect.ToolBoxFactory$ToolBoxImpl@5bbdee69

    scala> val tree = tb.parseExpr("1 to 3 map (_+1)")
    tree: tb.u.Tree = 1.to(3).map(((x$1) => x$1.$plus(1)))

    scala> val eval = tb.runExpr(tree)
    eval: Any = Vector(2, 3, 4)

That's code by Antoras, by the way, from this answer on Stack Overflow. While we do not go into any manipulation of the parsed code, we can actually decompose it into Tree sub-components, and manipulate it.
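To give a taste of what such manipulation can look like, here is a small sketch that rewrites a parsed tree before running it. It assumes Scala 2.10.0 final or later with both scala-reflect.jar and scala-compiler.jar on the classpath, where the ToolBox methods are spelled parse and eval rather than the parseExpr and runExpr of the milestone used above. TreeSketch and OneToTen are made-up names.

```scala
import scala.reflect.runtime.currentMirror
import scala.reflect.runtime.universe._
import scala.tools.reflect.ToolBox

object TreeSketch {
  val tb = currentMirror.mkToolBox()

  // Parse source text into an untyped tree.
  val tree: Tree = tb.parse("1 + 2")

  // Rewrite every literal 1 into a literal 10, leaving the rest untouched.
  object OneToTen extends Transformer {
    override def transform(t: Tree): Tree = t match {
      case Literal(Constant(1)) => Literal(Constant(10))
      case other                => super.transform(other)
    }
  }

  val rewritten: Tree = OneToTen.transform(tree)

  // Typecheck, compile and run the rewritten tree.
  val result: Any = tb.eval(rewritten)
}
```

The original text adds 1 and 2, but the rewritten tree adds 10 and 2, so result should be 12.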

End of Part 1

There's still a lot to see! We have touched on some concepts like Type and Tree, but without going into any detail, and there was a lot going on under the covers. That will have to wait a bit, though, to keep these posts at manageable levels.

To recapitulate, Scala 2.10 will come with a Scala reflection library. That library is used by the compiler itself, but divided into layers through the cake pattern, so different users see different levels of detail, keeping jar sizes adequate to each one's use, and hopefully hiding unwanted detail.

The reflection library also integrates with the upcoming macro facilities, enabling enterprising coders to manipulate code at compile time.

We saw the base and runtime layers, mentioned a bit of other layers, then went into some reasons for wanting a reflection library, and some tasks such a library ought to be able to do.

Finally, we looked at bits of a REPL session, where we used the reflection library to find out information we could never have found through plain Java reflection. We also saw a bit of code that made parsing and running Scala code at run time pretty easy.

In part 2, we'll look at the basic concepts of Scala Reflection's Universe, and how they relate to each other. We'll then proceed to the promised JSON serializer. We'll follow on this series with the deserializer, and end up by taking advantage of macros to avoid reflection at run time.

See you then!

Hmm, it seems that we really need to introduce `Type.resultType`, so that people don't write these huge pattern matches. Thanks for the feedback and looking forward to your next installments!

upd. You need to use =:= in order to accommodate aliases. If a method returned a type which is an alias for Int, the first version of the code wouldn't print it out.

Great post. very useful!

I'm trying to add some scala 2.10 reflection magic for lift-json.

https://github.com/iron9light/lift-json

Wish you could give me some advice.

IL

Here is my attempt: https://bitbucket.org/jaroslav/scala-macro-serialization/src

It packs and unpacks MsgPack (binary json) and packs Json, unpacking Json not implemented yet.

Still in experimental phase, far from production quality.

scala.makro.Universe is now scala.reflect.macros.Universe (to be corrected in post)
